Gan Wang, Piotr Nowakowski, Nima Farahmand Bafi, Benjamin Midtvedt, Falko Schmidt, Ruggero Verre, Mikael Käll, S. Dietrich, Svyatoslav Kondrat, Giovanni Volpe

arXiv: 2401.06260

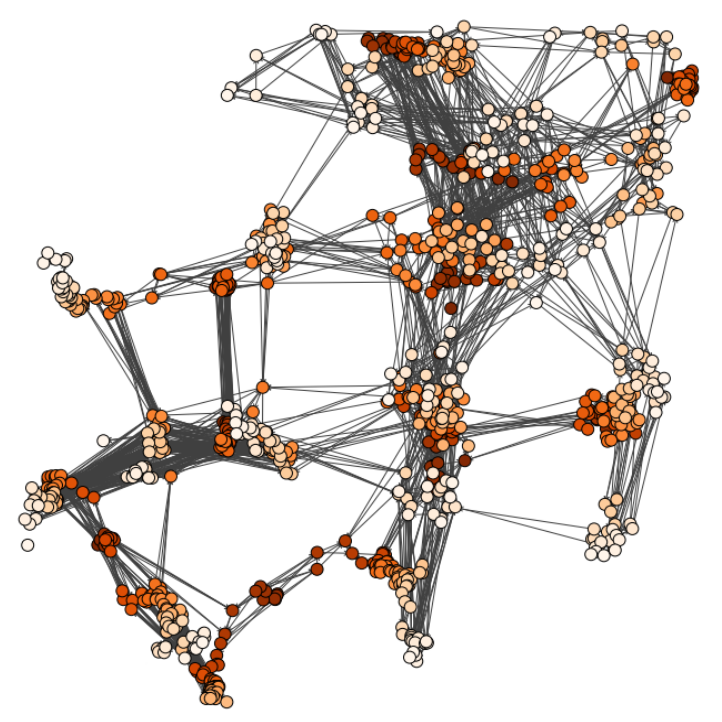

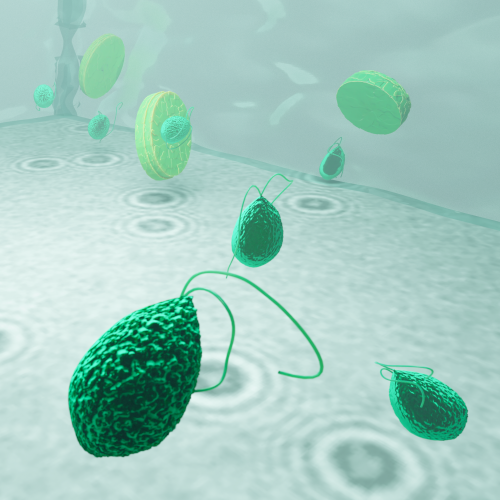

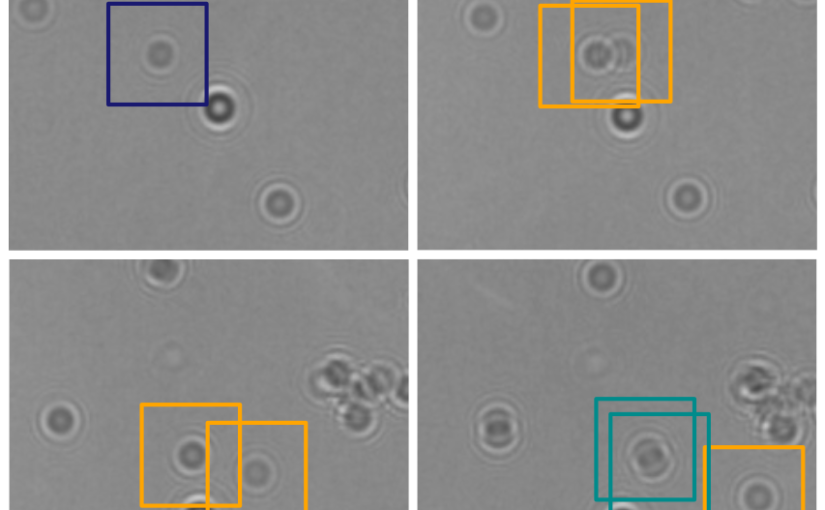

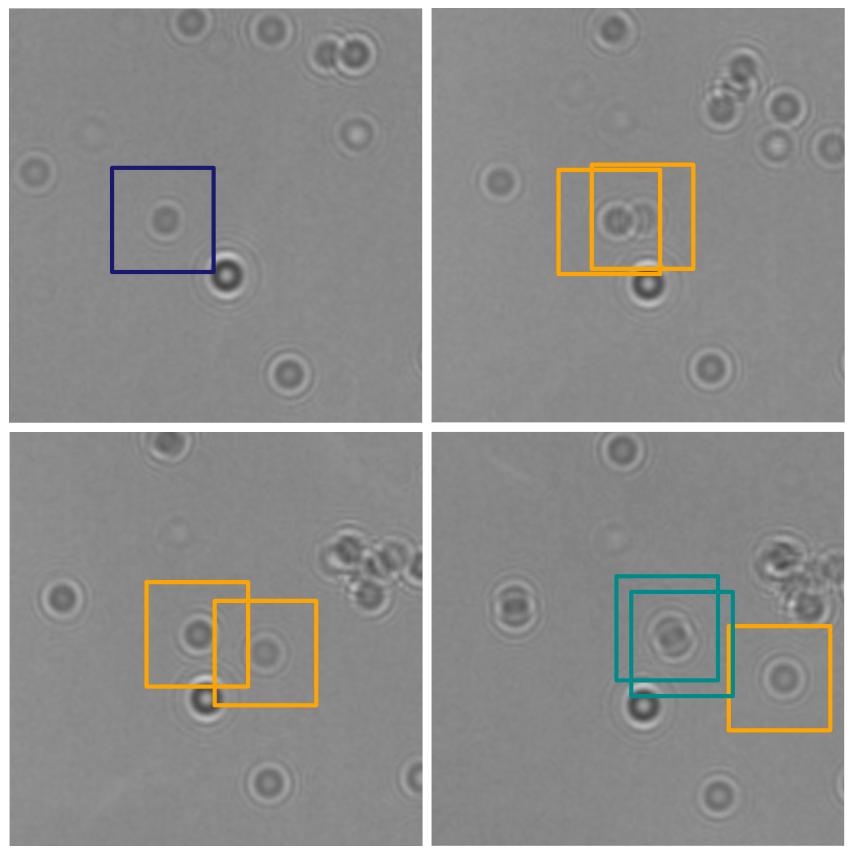

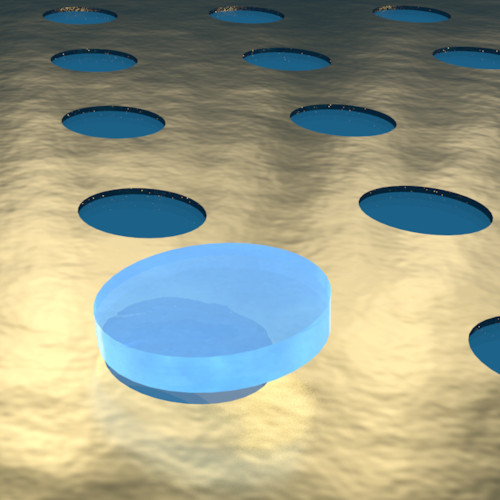

The manipulation of microscopic objects requires precise and controllable forces and torques. Recent advances have led to the use of critical Casimir forces as a powerful tool, which can be finely tuned through the temperature of the environment and the chemical properties of the involved objects. For example, these forces have been used to self-organize ensembles of particles and to counteract stiction caused by Casimir-Lifshitz forces. However, until now, the potential of critical Casimir torques has remained largely unexplored. Here, we demonstrate that critical Casimir torques can efficiently control the alignment of microscopic objects on nanopatterned substrates. We show experimentally, and corroborate with theoretical calculations and Monte Carlo simulations, that circular patterns on a substrate can stabilize the position and orientation of microscopic disks. By making the patterns elliptical, such microdisks can be subjected to a torque that flips them upright while simultaneously allowing for more accurate control of the microdisk position. More complex patterns can selectively trap 2D-chiral particles and generate particle motion similar to that of non-equilibrium Brownian ratchets. These findings provide new opportunities for nanotechnological applications requiring precise positioning and orientation of microscopic objects.