Introduction to G-Research, a quantitative research and technology company

Charles Martinez

G-Research, London, UK

27 March 2024

12:30-14:30

PJ

Organized by the CHAIR theme AI for Scientific Data Analysis

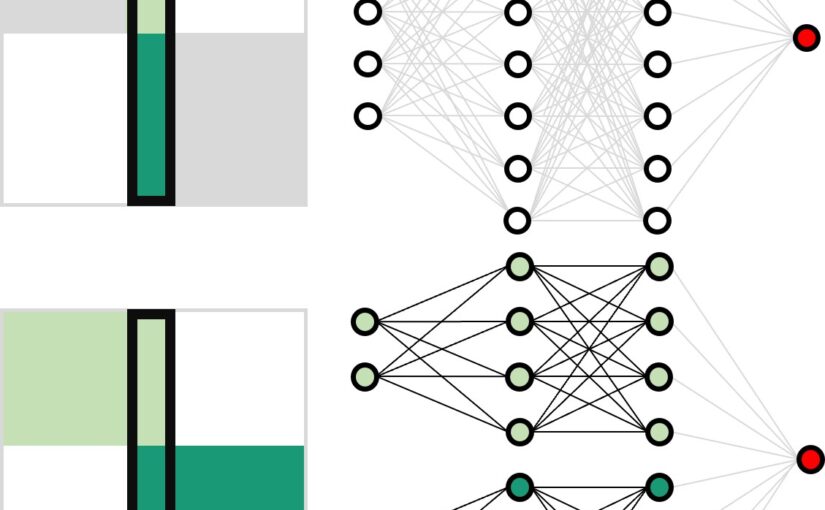

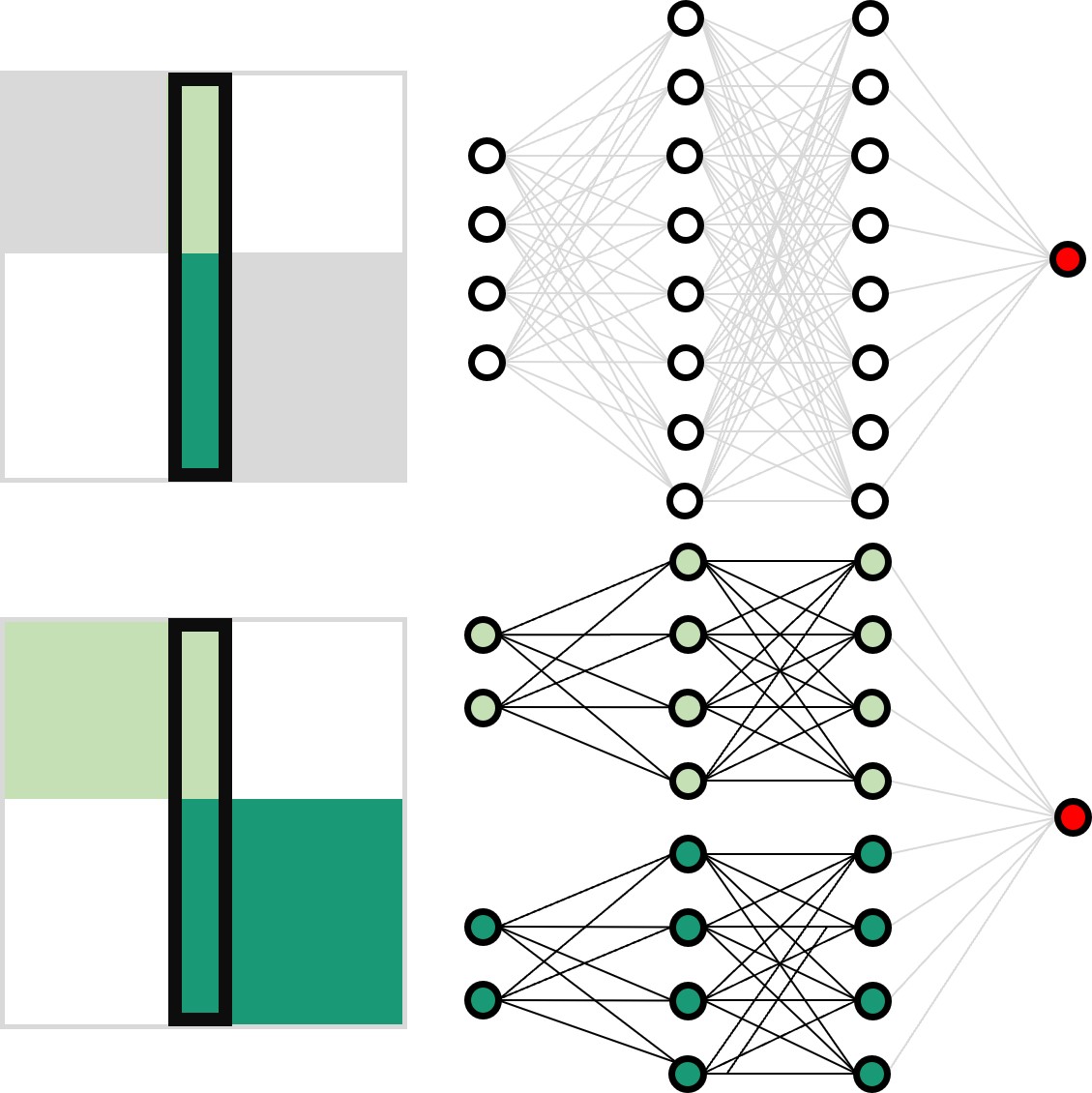

We are a leading quantitative research and technology company based in London. Day to day, we use a variety of quantitative techniques to predict movements in financial markets from large data sets gathered worldwide. Our business is built on mathematics, statistics, machine learning, natural language processing and deep learning, and our culture is academic and highly intellectual. In this seminar I will explain our background, current applications of AI research to finance, and our ongoing outreach, recruitment and grants programme.

Bio: Dr Charles Martinez is the Academic Relations Manager at G-Research. Charles began his studies as a physicist on the MPhys programme in the University of Portsmouth’s Physics Department, and later completed a PhD on phonon interactions in gallium nitride nanostructures at the University of Nottingham. He then worked on abstracting and indexing databases at the Institution of Engineering and Technology (IET) before moving into sales in 2010. Charles’ previous role was Key Account Manager at Elsevier, where he managed sales and renewals for the UK Russell Group institutions and for government and funding body accounts, and was one of the negotiators of the recent UK ScienceDirect Read and Publish agreement. Since leaving Elsevier, Charles has been dedicated to forming beneficial partnerships between G-Research and Europe’s top institutions. He lives in Cambridge, UK.