Emiliano Gómez will defend his PhD thesis on the 22nd of May at 10:30. The defense will take place in KA (Chemistry Department, Johanneberg Campus).

Title: Development and Application of a software to analyse networks with multilayer graph theory and deep learning

Abstract:

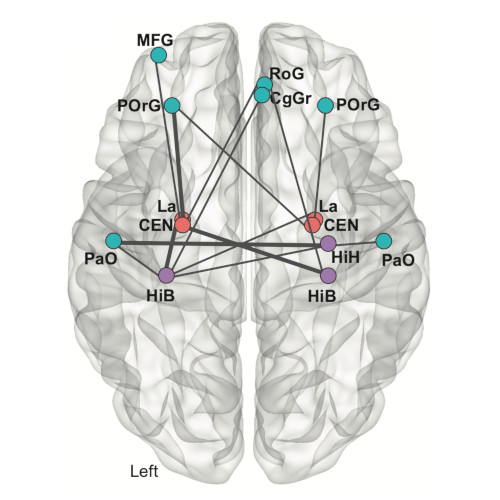

Network theory gives us the tools necessary to produce a model of our brain; understanding how the brain is wired will give us a new level of insight into its functionality. The brain network, the connectome, is formed by structural links such as synapses or fiber pathways in the brain. The connectome can also be inferred from statistical relationships in the flow of information, or from activation correlations between brain regions. These networks can be mapped using neuroimaging, which makes it possible to obtain information on the brain in vivo. Different neuroimaging modalities capture different properties of the brain. Statistical analysis is necessary for extracting meaningful insights from the network patterns obtained from neuroimages. Because tackling medical and biological questions requires enough data, huge data banks have emerged as a byproduct.

In this work, we present the software “Brain Analysis using Graph Theory 2” (BRAPH 2) (Paper I), which addresses the need for a toolbox designed for both complex graph theory and deep learning analyses of different imaging modalities. With BRAPH 2, we offer the neuroimaging community a tool that is open-source, flexible, and intuitive. BRAPH 2, at its core, comes with multi-graph capabilities. For Paper II, we employed the multiplex and multigraph capabilities of BRAPH 2 to analyze sex differences in brain connectivity in a healthy aging population. Finally, for Paper III, BRAPH 2 has been extended with two new graph measures (global memory capacity and nodal memory capacity), which obtain a prediction of memory capacity using Reservoir Computing; we relate these new measures to biological and cognitive characteristics of the cohort.

Supervisor: Giovanni Volpe

Examiner: Raimund Feifel

Opponent: Saikat Chatterjee, KTH, Stockholm

Committee: Marija Cvijovic, Alireza Salami, Wojciech Chachólski

Alternate board member: Mats Granath