The defense will take place in PJ, Institutionen för fysik, Origovägen 6b, Göteborg, at 13:00.

Title: Annotation-free deep learning for quantitative microscopy

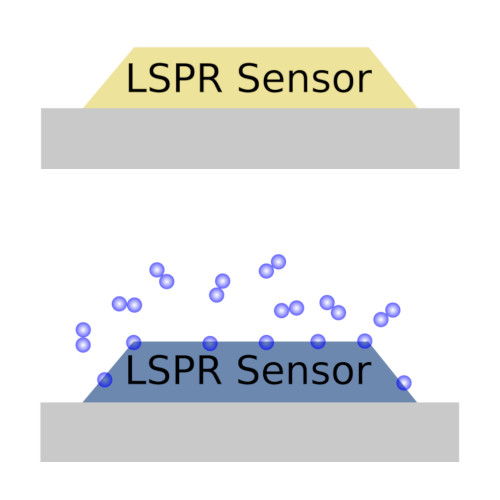

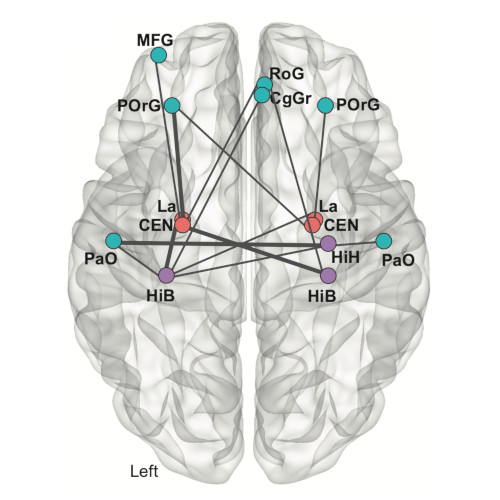

Abstract: Quantitative microscopy is an essential tool for studying and understanding microscopic structures. However, analyzing the large and complex datasets generated by modern microscopes presents significant challenges. Manual analysis is time-intensive and subjective, rendering it impractical for large datasets. While automated algorithms offer faster and more consistent results, they often require careful parameter tuning to achieve acceptable performance, and struggle to interpret the more complex data produced by modern microscopes. As such, there is a pressing need to develop new, scalable analysis methods for quantitative microscopy. In recent years, deep learning has transformed the field of computer vision, achieving superhuman performance in tasks ranging from image classification to object detection. However, this success depends on large, annotated datasets, which are often unavailable in microscopy. Applying deep learning to microscopy successfully and efficiently therefore requires new strategies that bypass the dependency on extensive annotations. In this dissertation, I aim to lower the barrier for applying deep learning in microscopy by developing methods that do not rely on manual annotations and by providing resources to assist researchers in using deep learning to analyze their own microscopy data. First, I present two cases where training annotations are generated through alternative means that bypass the need for human effort. Second, I introduce a deep learning method that leverages symmetries in both the data and the task structure to train a statistically optimal model for object detection without any annotations. Third, I propose a method based on contrastive learning to estimate nanoparticle sizes in diffraction-limited microscopy images, without requiring annotations or prior knowledge of the optical system. Finally, I deliver a suite of resources that help researchers apply deep learning to microscopy.
Through these developments, I aim to demonstrate that deep learning is not merely a “black box” tool. Instead, effective deep learning models should be designed with careful consideration of the data, assumptions, task structure, and model architecture, encoding as much prior knowledge as possible. By structuring these interactions with care, we can develop models that are more efficient, interpretable, and generalizable, enabling them to tackle a wider range of microscopy tasks.

Thesis: https://hdl.handle.net/2077/84178

Supervisor: Giovanni Volpe

Examiner: Dag Hanstorp

Opponent: Ivo Sbalzarini

Committee: Susan Cox, Maria Arrate Munoz Barrutia, Ignacio Arganda-Carreras

Alternate board member: Måns Henningson