Label-free measurement of biomolecules and their diffusion

Giovanni Volpe

9 September 2022, 16:45 (CEST)

Venice meeting on Fluctuations in small complex systems VI

Istituto Veneto di Scienze, Lettere ed Arti

Palazzo Franchetti, Venezia, 5-9 September 2022

News

Marcel Rey won best oral presentation at ECIS, Chania

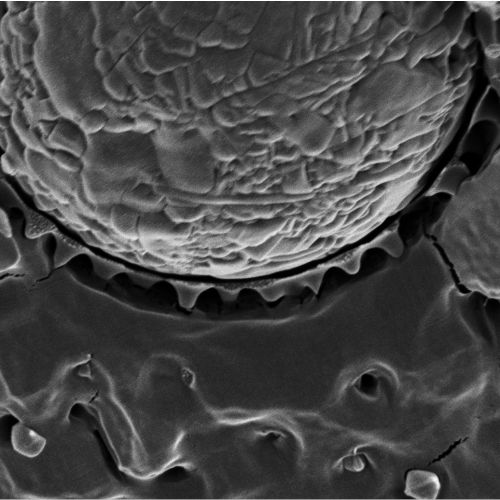

In the talk, Marcel presented his recent work on the destabilisation mechanism of temperature-responsive emulsions. He demonstrated that the presence or absence of stimuli-responsive emulsion behaviour is linked to the characteristic microstructure of the stabilising microgel particles. Surprisingly, only emulsions where the microgels are in a double-corona morphology show stimuli-responsive behaviour while emulsions stabilised with microgels in a single-corona morphology remain insensitive to temperature.

Presentation by M. Rey at ECIS 2022, Chania, 04 September 2022

Marcel Rey

Submitted to ECIS 2022

Date: 05 September 2022

Time: 16:40 (CET)

Temperature-responsive microgel-stabilized emulsions combine long-term storage with controlled release of the encapsulated liquid upon temperature increase. The destabilisation mechanism was previously primarily attributed to the shrinkage or desorption of the temperature-responsive microgels, leading to a lower surface coverage inducing coalescence.

Here, we link the macroscopic emulsion stability to the thermo-responsive behaviour and microstructure of individual microgels confined at liquid interfaces and demonstrate that the breaking mechanism is fundamentally different from that previously anticipated. Breaking of thermoresponsive emulsions is induced via bridging points in flocculated emulsions, where microgels are adsorbed to two oil droplets. When heated above their volume phase transition temperature, these bridging microgels exert an attractive force on both interfaces, which induces coalescence. Surprisingly, if such bridging points are avoided by low-shear emulsification, the obtained emulsion is insensitive to temperature and remains stable even up to 80 °C.

Hang Zhao joins the Soft Matter Lab

Hang has a Master’s degree in Biomedical Engineering from Linköping University, where he focused on machine learning and medical image processing.

In his PhD, he will focus on machine learning, graph theory, and neuroscience.

Martin Selin presented his half-time seminar on 2 September 2022

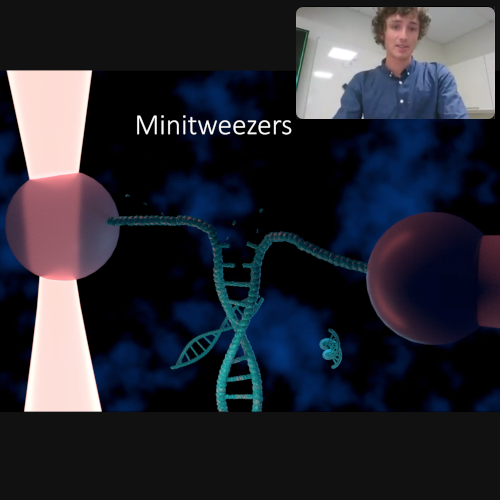

The presentation was held in hybrid format, with part of the audience in the Von Bahr room and the rest connected through Zoom. The half-time seminar consisted of a presentation of Martin's two main projects, followed by a discussion and questions posed by Martin's opponent, Dag Hanstorp.

The presentation started by providing a background on optical tweezers and continued with the ongoing project of positioning quantum dots using optical tweezers. Thereafter, the presentation moved on to the Minitweezers project. Data on DNA stretching was presented and shown to be in good agreement with results found in the literature. Lastly, the future of the two projects was outlined, specifically how to address the challenging task of detecting moving quantum dots and how to improve the Minitweezers system through automation.

Gideon Jägenstedt joins the Soft Matter Lab

Gideon is a Master's student in the Complex Adaptive Systems programme at Chalmers University of Technology.

During his time at the Soft Matter Lab, he will work on his Master's thesis project on particle representation and graph neural networks.

An anomalous competition: assessment of methods for anomalous diffusion through a community effort

Carlo Manzo, Giovanni Volpe

Submitted to SPIE-ETAI

Date: 25 August 2022

Time: 9:00 (PDT)

Deviations from the law of Brownian motion, typically referred to as anomalous diffusion, are ubiquitous in science and associated with non-equilibrium phenomena, flows of energy and information, and transport in living systems. In recent years, the boom in machine learning has boosted the development of new methods to detect and characterize anomalous diffusion from individual trajectories, going beyond classical calculations based on the mean squared displacement. We thus designed the AnDi challenge, an open community effort to objectively assess the performance of conventional and novel methods. We developed a Python library for generating simulated datasets according to the most popular theoretical models of diffusion. We evaluated 16 methods over 3 different tasks and 3 different dimensions, involving anomalous exponent inference, model classification, and trajectory segmentation. Our analysis provides the first assessment of methods for anomalous diffusion in a variety of realistic conditions of trajectory length and noise. Furthermore, we compared the predictions provided by these methods for several experimental datasets. The results of this study further highlight the role that anomalous diffusion plays in defining biological function, while revealing insight into the current state of the field and providing a benchmark for future developers.
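To make the "classical calculations based on the mean squared displacement" concrete, the following is a minimal sketch of how an anomalous exponent can be estimated from a single trajectory: the time-averaged MSD is computed for a few short lags and a straight line is fitted in log-log space, the slope being the exponent. This is only an illustrative baseline, not the AnDi library nor any of the methods evaluated in the challenge; the function names `time_averaged_msd` and `estimate_alpha` and the choice of `max_lag=10` are assumptions made for this example.

```python
import numpy as np


def time_averaged_msd(x, max_lag):
    """Time-averaged MSD of a 1D trajectory for lags 1..max_lag."""
    lags = np.arange(1, max_lag + 1)
    msd = np.array([np.mean((x[lag:] - x[:-lag]) ** 2) for lag in lags])
    return lags, msd


def estimate_alpha(x, max_lag=10):
    """Estimate the anomalous exponent as the log-log slope of the MSD.

    Assumes MSD(lag) ~ lag**alpha over the fitted lags (hypothetical helper).
    """
    lags, msd = time_averaged_msd(np.asarray(x, dtype=float), max_lag)
    slope, _intercept = np.polyfit(np.log(lags), np.log(msd), 1)
    return slope


# Simulated Brownian trajectory: cumulative sum of Gaussian steps.
rng = np.random.default_rng(0)
brownian = np.cumsum(rng.normal(size=10_000))
alpha_brownian = estimate_alpha(brownian)

# Ballistic trajectory: constant velocity, so MSD(lag) = lag**2 exactly.
ballistic = np.arange(1_000, dtype=float)
alpha_ballistic = estimate_alpha(ballistic)

print(f"Brownian: alpha = {alpha_brownian:.2f}")
print(f"Ballistic: alpha = {alpha_ballistic:.2f}")
```

For the Brownian trajectory the estimate should lie close to 1, and for the ballistic one it equals 2. On short or noisy trajectories this kind of fit becomes unreliable, which is the regime where the abstract reports that newer methods were assessed.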

Presenter: Giovanni Volpe

Presentation by Y.-W. Chang at SPIE-ETAI, San Diego, 24 August 2022

Yu-Wei Chang, Giovanni Volpe, Joana B Pereira

Submitted to SPIE-ETAI

Date: 24 August 2022

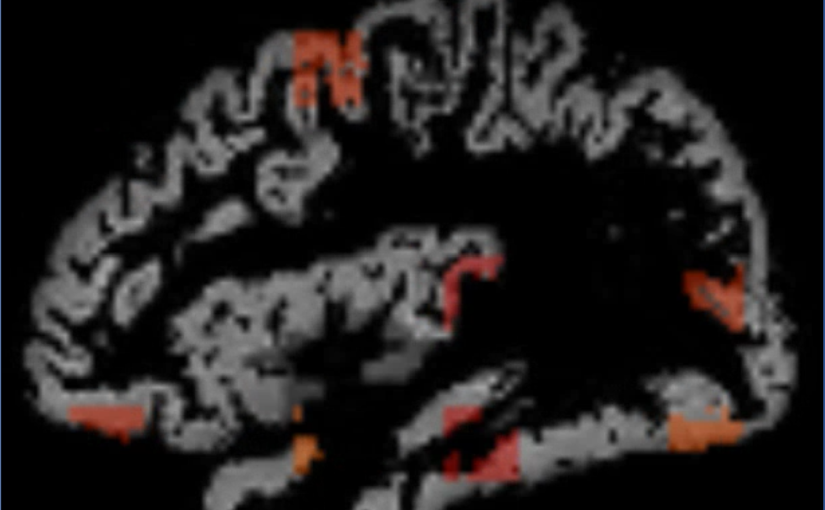

Time: 16:40 (PDT)

Previous studies have suggested that Alzheimer’s disease (AD) is typically characterized by abnormal accumulation of tau proteins in neurofibrillary tangles. This is usually assessed by measuring tau levels in regions of interest (ROIs) defined based on previous post-mortem studies. However, it remains unclear whether this approach is suitable for assessing tau accumulation in vivo in individuals at different stages of the disease. This study employed a data-driven deep learning approach to detect tau deposition across different AD stages at the voxel level. Moreover, the classification performance of this approach in distinguishing different AD stages was compared with that obtained using conventional ROIs.

Presentation by A. Ciarlo at SPIE-OTOM, San Diego, 24 August 2022

Periodic feedback effect in counterpropagating intracavity optical tweezers

Antonio Ciarlo, Giuseppe Pesce, Fatemeh Kalantarifard, Parviz Elahi, Agnese Callegari, Giovanni Volpe, Antonio Sasso

Submitted to SPIE-OTOM

Date: 24 August 2022

Time: 14:00 (PDT)

Intracavity optical tweezers are a powerful tool to trap microparticles in water using the nonlinear feedback effect produced by the motion of the particle when it is trapped inside the laser cavity. In such systems, two configurations are possible: a single-beam configuration and a counterpropagating one. A removable isolator allows switching between these configurations by suppressing one of the beams. When a particle is trapped in the counterpropagating configuration, the measured optical power shows a feedback effect for each beam, which is also present when the two beams are misaligned and the trapped particle periodically jumps between them.

Presentation by H. Bachimanchi at SPIE-ETAI, San Diego, 24 August 2022

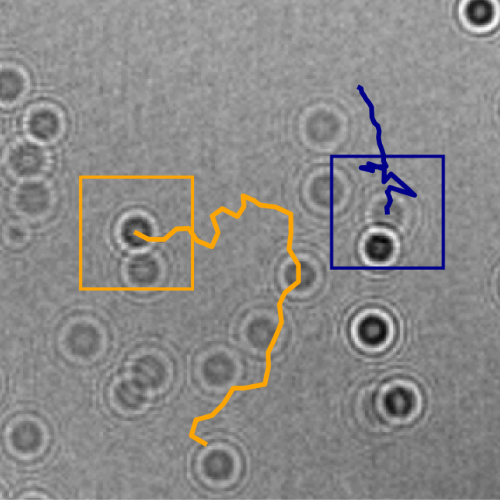

Harshith Bachimanchi, Benjamin Midtvedt, Daniel Midtvedt, Erik Selander, and Giovanni Volpe

Presentation at SPIE-ETAI 2022

San Diego, USA

24 August 2022, 11:45 PDT

The marine microbial food web plays a central role in the global carbon cycle. Our mechanistic understanding of the ocean, however, is biased towards its larger constituents, while rates and biomass fluxes in the microbial food web are mainly inferred from indirect measurements and ensemble averages. Yet, resolution at the level of the individual microplankton is required to advance our understanding of the oceanic food web. Here, we demonstrate that, by combining holographic microscopy with deep learning, we can follow microplankton through generations, continuously measuring their three-dimensional position and dry mass. The deep learning algorithms circumvent the computationally intensive processing of holographic data and allow inline measurements over extended time periods. This permits us to reliably estimate growth rates, both in terms of dry mass increase and cell divisions, as well as to measure trophic interactions between species such as predation events. The individual resolution provides information about selectivity, individual feeding rates and handling times for individual microplankton. This method is particularly useful to explore the flux of carbon through microzooplankton, the most important and least known group of primary consumers in the global oceans. We exemplify this by detailed descriptions of microzooplankton feeding events, cell divisions, and long-term monitoring of single cells from division to division.
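As an illustration of how a growth rate might be obtained from a continuous dry-mass record of a single cell, here is a minimal sketch that fits a straight line to the logarithm of the dry mass over time. It assumes exponential growth between divisions, which is a modelling assumption made for this example and not something stated in the abstract; the function name `specific_growth_rate`, the units (hours, picograms), and the synthetic data are likewise hypothetical.

```python
import numpy as np


def specific_growth_rate(t_hours, dry_mass_pg):
    """Fit log(mass) = log(m0) + rate * t and return (rate, doubling time).

    Assumes exponential growth over the fitted interval (an assumption
    of this sketch, not a result taken from the presented work).
    """
    t = np.asarray(t_hours, dtype=float)
    m = np.asarray(dry_mass_pg, dtype=float)
    rate, _log_m0 = np.polyfit(t, np.log(m), 1)
    return rate, np.log(2) / rate


# Synthetic, noise-free example: 100 pg cell growing at 0.05 per hour.
t = np.linspace(0, 12, 25)
mass = 100.0 * np.exp(0.05 * t)
rate, doubling_time = specific_growth_rate(t, mass)

print(f"growth rate = {rate:.3f} per hour")
print(f"doubling time = {doubling_time:.1f} hours")
```

With real single-cell traces, the fit would be applied separately to each interval between divisions, since the dry mass drops at every division event.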