Alex Lech, Mirja Granfors, Benjamin Midtvedt, Jesús Pineda, Harshith Bachimanchi, Carlo Manzo, Giovanni Volpe

BNMI 2025, 19-22 August 2025, Gothenburg, Sweden

Date: 20 August 2025

Time: 15:15-19:00

Place: Wallenberg Conference Centre

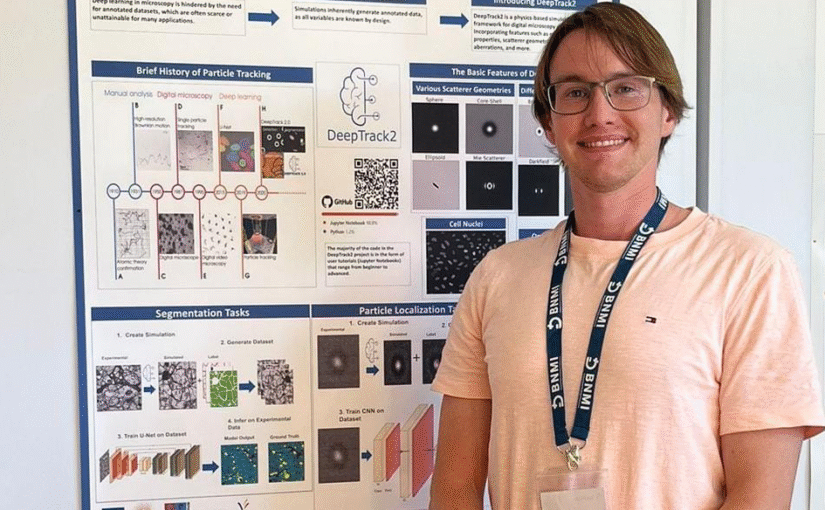

DeepTrack2 is a flexible and scalable Python library for simulating microscopy data and generating high-quality synthetic datasets for training deep learning models. It supports a wide range of imaging modalities, including brightfield, fluorescence, darkfield, and holography, so users can reproduce realistic experimental conditions. Its modular architecture lets users customize the experimental setup, simulate a variety of objects, and incorporate optical aberrations, realistic experimental noise, and other user-defined effects, with full control over features and parameters. Because every simulated image comes with its ground truth, DeepTrack2 removes the need for labor-intensive manual annotation, which makes it accessible to researchers in any field that relies on image analysis and deep learning. This accelerates the development of AI-driven methods for experiments by providing the large volumes of data that deep learning models often require. DeepTrack2 has already been used in applications including cell tracking, classification, segmentation, and holographic reconstruction.
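The idea that simulation replaces manual annotation can be illustrated with a minimal sketch. The code below does not use the DeepTrack2 API; it is a plain NumPy stand-in under simplifying assumptions (a single particle imaged as a Gaussian spot, a constant background, Poisson shot noise). The function names `simulate_particle_image` and `make_dataset` and all parameter values are hypothetical and chosen only for illustration.

```python
import numpy as np


def simulate_particle_image(rng, size=64, sigma=2.0, amplitude=100.0, background=10.0):
    """Return (image, position) for one noisy Gaussian spot.

    Assumption: the particle is modeled as a 2D Gaussian, not a physical
    point-spread function as a real microscopy simulator would use.
    """
    # Ground-truth position (row, col), kept away from the image border.
    position = rng.uniform(8, size - 8, size=2)

    # Noise-free image: constant background plus a Gaussian spot.
    rows, cols = np.mgrid[0:size, 0:size]
    r2 = (rows - position[0]) ** 2 + (cols - position[1]) ** 2
    clean = background + amplitude * np.exp(-r2 / (2 * sigma**2))

    # Poisson noise mimics photon shot noise on the detector.
    image = rng.poisson(clean).astype(np.float32)
    return image, position


def make_dataset(n_images, seed=0):
    """Build arrays of images and their labels without any manual annotation."""
    rng = np.random.default_rng(seed)
    pairs = [simulate_particle_image(rng) for _ in range(n_images)]
    images = np.stack([image for image, _ in pairs])
    positions = np.stack([position for _, position in pairs])
    return images, positions


images, positions = make_dataset(100)
print(images.shape, positions.shape)  # (100, 64, 64) (100, 2)
```

Each image is paired with the exact position used to generate it, so the label comes for free. A library such as DeepTrack2 applies the same principle with physically realistic optics, aberrations, and noise models.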

DeepTrack2 is available on GitHub at https://github.com/DeepTrackAI/DeepTrack2, together with extensive documentation, tutorials, and an active community for support and collaboration.

References:

Digital video microscopy enhanced by deep learning.

Saga Helgadottir, Aykut Argun & Giovanni Volpe.

Optica, volume 6, pages 506-513 (2019).

Quantitative Digital Microscopy with Deep Learning.

Benjamin Midtvedt, Saga Helgadottir, Aykut Argun, Jesús Pineda, Daniel Midtvedt & Giovanni Volpe.

Applied Physics Reviews, volume 8, article number 011310 (2021).