Yu-Wei Chang, Laura Natali, Oveis Jamialahmadi, Stefano Romeo, Joana B Pereira, Giovanni Volpe

Submitted to SPIE-ETAI

Date: 24 August 2022

Time: 08:00 (PDT)

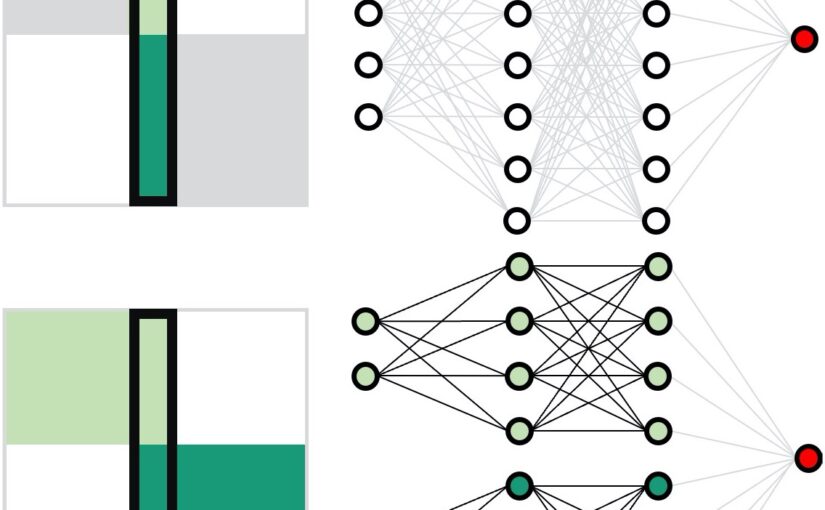

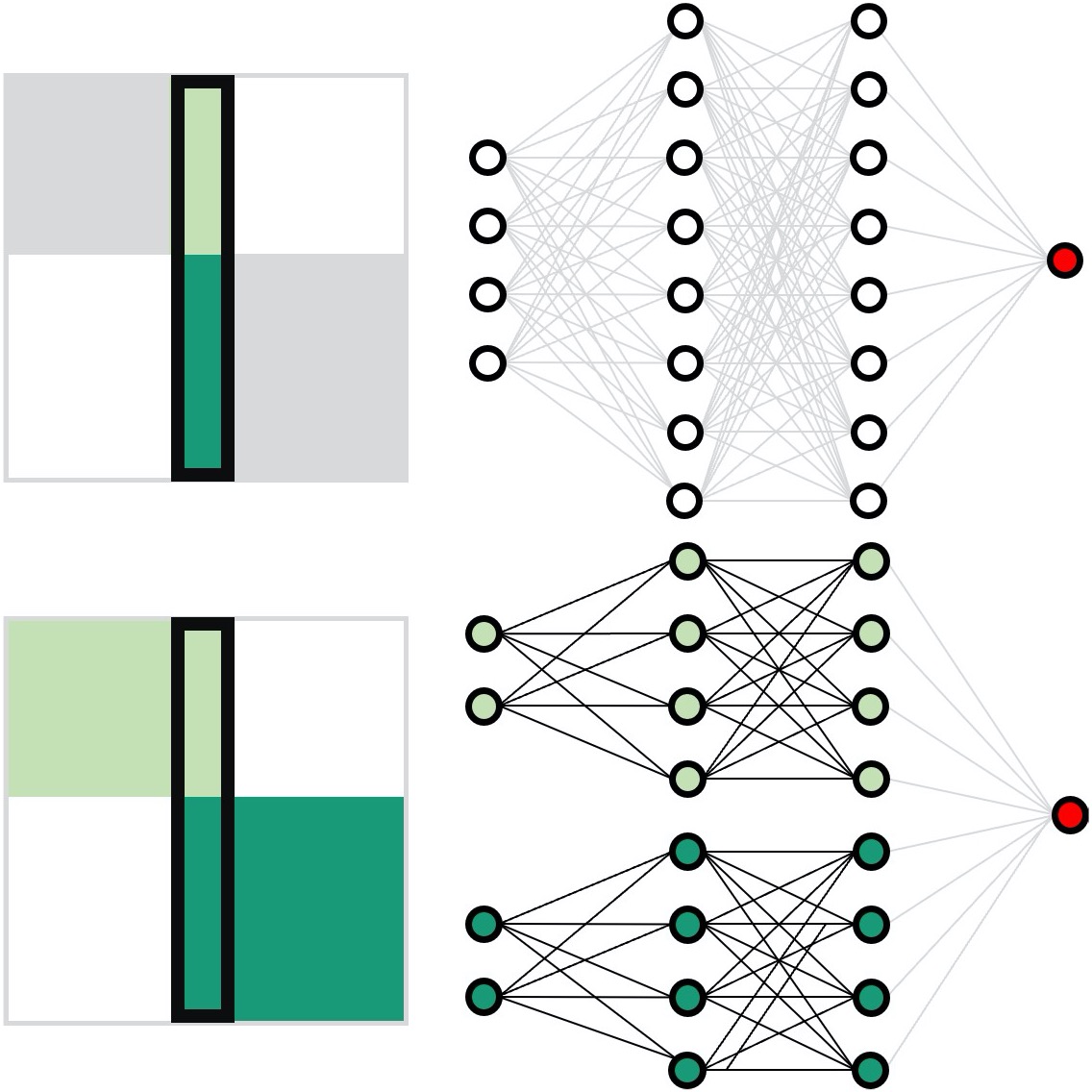

Neural network training and validation rely on the availability of large high-quality datasets. However, in many cases, only incomplete datasets are available, particularly in health care applications, where patients typically undergo different clinical procedures or may drop out of a study. Here, we introduce GapNet, an alternative deep-learning training approach that can use highly incomplete datasets without overfitting or introducing artefacts. Using two highly incomplete real-world medical datasets, we show that GapNet improves the identification of patients with underlying Alzheimer’s disease pathology and of patients at risk of hospitalization due to COVID-19. This improvement over commonly used imputation methods suggests that GapNet can become a general tool for handling incomplete medical datasets.